Italy Bans Chinese DeepSeek AI: Are Our Data and Ethics at Risk?

Italy’s data watchdog, the Garante, has taken a bold step by banning DeepSeek AI, a popular Chinese service that stores user data on Chinese servers. How can a tool, embraced by millions, be allowed when its data practices and ethical standards are so murky?

The ban follows a flurry of questions from the regulator about DeepSeek's data collection methods, questions the company failed to answer satisfactorily. With user data governed by Chinese law and little transparency about how it is handled, Italy's decision has sparked a major debate about privacy and national security.

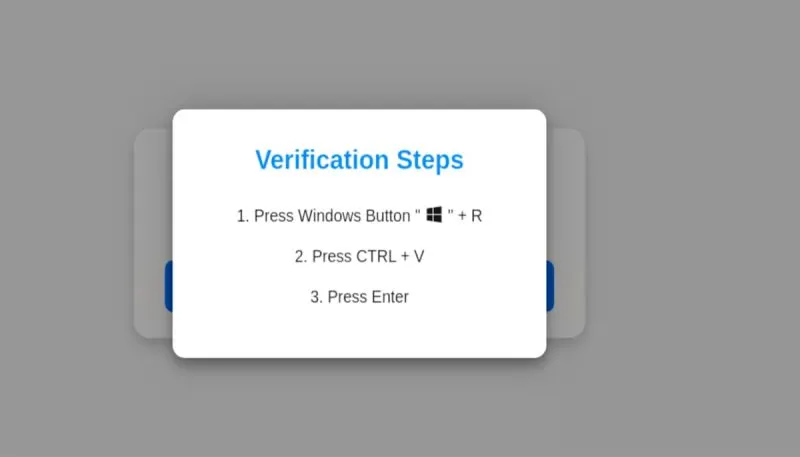

Beyond privacy concerns, DeepSeek’s AI models have been found to be vulnerable to jailbreak techniques that bypass safety filters. Researchers have shown that with the right prompts, the chatbot can generate harmful instructions, from writing malicious code to giving step-by-step guides on dangerous activities. If an AI can be so easily manipulated, should it even be available to the public?

Adding another layer of controversy, security analysts have suggested that DeepSeek's reasoning model, DeepSeek-R1, may have been trained on OpenAI data, raising legal and ethical questions about its originality. Could DeepSeek be using copyrighted or proprietary data without permission? And if so, how did it slip through the cracks?

Italy’s move echoes past regulatory actions, like when the country temporarily banned ChatGPT in 2023 over privacy concerns. OpenAI managed to resolve the issue, but DeepSeek’s case may not be as simple. With its ties to Chinese law and a lack of clear safeguards, will more countries follow Italy’s lead?

As Italy digs deeper with its investigation, one thing is clear: the rush to innovate must be balanced with strict safeguards. The question remains—are we trading convenience for compromised ethics and security?
